Practical Regex

This is not an introduction to regular expressions. If you are completely new to the topic, you can find many tutorials on the web, like the regex chapter of “Automate the Boring Stuff”, the re module documentation, and many others. I can also recommend the book Mastering Regular Expressions, which is how I learned regex.

This is about how to structure patterns for a few use cases that I came across.

Replace sub-match

One example that I had to implement again recently was this:

I have a structured file (C source code in my case), and I need to change a variable (a version string). It might look like this:

# Usually comes from reading the file

source_code = '''

version_string = "1.2.3";

not_a_version = "2.3.4";

'''

The goal is to replace the “1.2.3” with the new version, i.e. “1.2.4”. The replacement must work without knowing the current version string (so a regex of “1\.2\.3” is not an option), and it must not replace similar-looking strings elsewhere (so not “\d+\.\d+\.\d+”, which would also change the value of not_a_version in the given example).
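To make the second constraint concrete, here is a minimal sketch that runs the overly broad pattern against the same sample text. The name too_broad is only there for illustration.

```python
import re

source_code = '''
version_string = "1.2.3";
not_a_version = "2.3.4";
'''

# A pattern that matches any dotted triple also hits not_a_version.
too_broad = r'\d+\.\d+\.\d+'
result = re.sub(too_broad, '1.2.4', source_code)
print(result)
```

Both assignments end up with the new value, which is exactly what has to be avoided.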

The most primitive approach I came up with is this:

import re

regex = r'^version_string = "[^"]+";$'

replacement = 'version_string = "1.2.4";'

print(re.sub(regex, replacement, source_code, flags=re.M))

version_string = "1.2.4";

not_a_version = "2.3.4";

Repeating the stuff surrounding the actual version string is not very elegant, and makes it harder to adapt to other uses (replacing different variable names). Instead, I could capture the surrounding stuff in a group and use it in the replacement:

regex = r'^(version_string = )"[^"]+(";)$'

replacement = r'\1"1.2.4\2'

print(re.sub(regex, replacement, source_code, flags=re.M))

version_string = "1.2.4";

not_a_version = "2.3.4";

Why do I have one of the quotes outside of my first capture group, only to literally include it in the replacement string, you might ask?

If I didn’t have the quote in my replacement string, it would read r'\11.2.4\2', which is not “Use first capture group, followed by a literal digit one”, but “Use the eleventh capture group, followed by a dot”, and thus doesn’t work.

If anyone knows how to backreference to a capture group, and follow that reference by a digit, please let me know! (Putting parentheses or braces around the capture group number doesn’t work.)

Update: /u/kaihatsusha pointed out that the Python documentation actually explains how to do this. The first capture group can also be referenced in the replacement string via \g<1>, which ends the reference explicitly, so a digit may follow it:

regex = r'^(version_string = ")[^"]+(";)$'

replacement = r'\g<1>1.2.4\2'

print(re.sub(regex, replacement, source_code, flags=re.M))

version_string = "1.2.4";

not_a_version = "2.3.4";
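The difference between the two spellings can be checked directly. The following is a small sketch against Python's re module; the one-line sample string is made up for the demonstration.

```python
import re

line = 'version_string = "1.2.3";'
regex = r'^(version_string = ")[^"]+(";)$'

# \g<1> ends the group reference explicitly, so the following "1" is literal.
fixed = re.sub(regex, r'\g<1>1.2.4\2', line)
print(fixed)

# \11 is read as a reference to group 11, which this pattern does not have,
# so the re module rejects the replacement template.
try:
    re.sub(regex, r'\11.2.4\2', line)
    ambiguous_failed = False
except re.error as exc:
    ambiguous_failed = True
    print('re.error:', exc)
```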

Let’s see what else we can come up with. Recently (and embarrassingly), I wrote this:

def replace_group(text='', n=1):
    def f(match):
        s, e = match.regs[0]
        s1, e1 = match.regs[n]
        return match.group(0)[:s1-s] + text + match.group(0)[e1-s:]
    return f

re.sub also accepts a function in place of the replacement string. That function will get the match object as an argument, and its return value will be used as the replacement. replace_group returns such a function. It will take the complete match, and inside that match, only replace the nth group by text.

It is used like this:

regex = r'^version_string = "([^"]+)";$'

print(re.sub(regex, replace_group('1.2.4'), source_code, flags=re.M))

version_string = "1.2.4";

not_a_version = "2.3.4";

So now, only the part I actually want to replace is defined as a capture group. This feature of re.sub is quite powerful, but overkill for this purpose.
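As far as I can tell, match.regs is not part of the documented match object API. Here is a sketch of the same idea using match.start() and match.end() instead. The name replace_group_documented is hypothetical and only serves to keep the two versions apart.

```python
import re

def replace_group_documented(text='', n=1):
    """Return a callback for re.sub that swaps only group n for text."""
    def f(match):
        whole = match.group(0)
        offset = match.start()  # position of the whole match in the input
        # Group positions are relative to the input, so shift them by offset.
        return whole[:match.start(n) - offset] + text + whole[match.end(n) - offset:]
    return f

source_code = 'version_string = "1.2.3";\nnot_a_version = "2.3.4";\n'
regex = r'^version_string = "([^"]+)";$'
updated = re.sub(regex, replace_group_documented('1.2.4'), source_code, flags=re.M)
print(updated)
```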

… Enter lookaround assertions! You can tell a regex to match something only if the text around it (before or after) either matches or doesn’t match a given regex (without making the prefix or suffix part of the match):

- (?=…): text is followed by …

- (?!…): text is not followed by …

- (?<=…): text is prefixed by …

- (?<!…): text is not prefixed by …
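A toy example may help to tell the four assertions apart. The sample text and variable names below are invented for illustration; each pattern matches only the digits, never the surrounding context.

```python
import re

text = 'price: 10 USD, 20 EUR, tax: 30 USD'

# (?=...)  : numbers that are followed by " USD"
followed = re.findall(r'\d+(?= USD)', text)

# (?!...)  : whole numbers that are not followed by " USD"
not_followed = re.findall(r'\b\d+\b(?! USD)', text)

# (?<=...) : numbers that are preceded by "tax: "
preceded = re.findall(r'(?<=tax: )\d+', text)

# (?<!...) : whole numbers that are not preceded by "tax: "
not_preceded = re.findall(r'(?<!tax: )\b\d+', text)

print(followed, not_followed, preceded, not_preceded)
```

The \b word boundaries in the negative variants keep the engine from backtracking into a partial number (for example matching only the “1” of “10”).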

Here’s how to use it for the task at hand:

regex = r'(?<=^version_string = ")[^"]+(?=";$)'

replacement = '1.2.4'

print(re.sub(regex, replacement, source_code, flags=re.M))

version_string = "1.2.4";

not_a_version = "2.3.4";

This is the most elegant way in my opinion, as the replacement string is now exactly what should be inserted.
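Since the pattern no longer has to be repeated in the replacement, it is also easy to adapt to other variable names. Here is a sketch of a small helper, assuming every assignment has the exact form name = "value"; on its own line. The function name set_string_variable is hypothetical.

```python
import re

def set_string_variable(source, name, value):
    """Replace the quoted value assigned to `name` in `source`.

    Hypothetical helper; assumes lines of the form: name = "value";
    """
    # re.escape keeps the name literal, so the lookbehind stays fixed-width.
    regex = r'(?<=^' + re.escape(name) + r' = ")[^"]+(?=";$)'
    # A function replacement inserts `value` verbatim, so backslashes in it
    # are not interpreted as group references.
    return re.sub(regex, lambda mo: value, source, flags=re.M)

source_code = 'version_string = "1.2.3";\nnot_a_version = "2.3.4";\n'
updated = set_string_variable(source_code, 'version_string', '1.2.4')
print(updated)
```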

Takeaway: Know your tools (the re module in this case), and apply what suits the problem at hand! And by the way, the tool here is the re module, not regular expressions in general. There are other regex engines out there, and not all of them support the lookaround assertions. Emacs is one example of this, and the re2 module is another.

Search for key-value pairs of some form

Sometimes I want to parse text that is a representation of keys and values. A neat trick to get those into a Python dict is to use the fact that re.findall returns a list of tuples if the regex contains more than one capture group. So if you have exactly two capture groups, the return value of re.findall can be passed directly to dict():

text = '''

a: 1

foo: bar

'''

regex = r'([^:\n]+)\s*:\s*(.*)'

dict(re.findall(regex, text))

{'a': '1', 'foo': 'bar'}
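One caveat with this pattern: \s also matches newlines, and the key group keeps trailing blanks. The sketch below contrasts it with a stricter variant that is anchored to single lines. The sample text and the name strict_regex are assumptions made for the demonstration.

```python
import re

text = '''
a : 1
empty:
foo: bar
'''

# Original pattern: \s can match a newline, and the key keeps trailing blanks.
loose = dict(re.findall(r'([^:\n]+)\s*:\s*(.*)', text))

# Variant that only skips spaces and tabs and anchors each pair to one line.
strict_regex = r'^[ \t]*([^:\n]+?)[ \t]*:[ \t]*(.*)$'
strict = dict(re.findall(strict_regex, text, flags=re.M))

print(loose)
print(strict)
```

With the original pattern, the empty value swallows the following line; the stricter variant keeps the three pairs separate.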

Parsing table-like stuff

For parsing tables of which you know the column names, you can use named capture groups in conjunction with `mo.groupdict()`. Also, building the regular expression dynamically is a good idea, as the short regex building blocks are easier to read than a single, long regex.

text = '''

one two three

spam spam spam

baked beans spam

'''

separator_regex = ' '

field_regex = fr'(?P<{{}}>[^{separator_regex}\n]+)'

colnames = ['col1', 'col2', 'col3']

regex = separator_regex.join(field_regex.format(x) for x in colnames)

[mo.groupdict() for mo in re.finditer(regex, text)]

[{'col1': 'one', 'col2': 'two', 'col3': 'three'}, {'col1': 'spam', 'col2': 'spam', 'col3': 'spam'}, {'col1': 'baked', 'col2': 'beans', 'col3': 'spam'}]
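To see what the building blocks expand to, it helps to look at the assembled pattern. The following sketch wraps the same construction in a function; build_row_regex is a hypothetical name, and the separator is assumed to be a single character without special meaning inside a character class.

```python
import re

def build_row_regex(colnames, separator=' '):
    """Join one named group per column, separated by `separator`."""
    field = fr'(?P<{{}}>[^{separator}\n]+)'
    return separator.join(field.format(name) for name in colnames)

regex = build_row_regex(['col1', 'col2', 'col3'])
print(regex)

# The same builder with a different separator and different column names.
comma_regex = build_row_regex(['key', 'value'], separator=',')
row = re.search(comma_regex, 'spam,eggs').groupdict()
print(row)
```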

You’ll have to decide what the parsed data structure should look like. A list of lists (or tuples in this case) is the most general way to represent a table, and can be generated with mo.groups instead of mo.groupdict:

[mo.groups() for mo in re.finditer(regex, text)]

[('one', 'two', 'three'), ('spam', 'spam', 'spam'), ('baked', 'beans', 'spam')]

On top of this, you could improve expressiveness by using a namedtuple for the rows:

from collections import namedtuple

rowtype = namedtuple('row', colnames)

[rowtype._make(mo.groups()) for mo in re.finditer(regex, text)]

[row(col1='one', col2='two', col3='three'), row(col1='spam', col2='spam', col3='spam'), row(col1='baked', col2='beans', col3='spam')]

You can see the advantage of actually having a list of column names instead of a single regex with hardcoded capture group names, as the former can be used for creating the row type.

Maybe your particular use case would require the first cell of each row to be a dict key for getting the other cells. Then, a short dict comprehension is helpful:

{x[0]: x[1:] for x in re.findall(regex, text)}

{'one': ('two', 'three'), 'spam': ('spam', 'spam'), 'baked': ('beans', 'spam')}

Now that we have covered tables as a list of lists, a list of dicts, and a dict of lists (ok, tuples…), all that remains is creating a dict of dicts:

{x[0]: dict(zip(colnames[1:], x[1:])) for x in re.findall(regex, text)}

{'one': {'col2': 'two', 'col3': 'three'}, 'spam': {'col2': 'spam', 'col3': 'spam'}, 'baked': {'col2': 'beans', 'col3': 'spam'}}

Which of these ways of parsing your data is the best one depends a lot on the kind of data you have. If the input format resembles csv, I would suggest using the csv module. This is especially true once arbitrary quoted strings are encountered, since those might contain the string that normally separates the fields. And if third-party libraries can be used, pandas' read_csv function is definitely worth a look.
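As a sketch of the csv route, here is the space-separated table again, this time with one quoted cell that contains the separator. The sample data is made up; colnames mirrors the list used earlier.

```python
import csv
import io

text = '''one two three
spam "spam spam" spam
'''

# delimiter=' ' mirrors the space-separated table from above; the quoted
# cell contains the separator and still comes out as one field.
rows = list(csv.reader(io.StringIO(text), delimiter=' '))
print(rows)

# Turning each row into a dict keyed by column name.
colnames = ['col1', 'col2', 'col3']
records = [dict(zip(colnames, row)) for row in rows]
print(records)
```

The regex built from [^ \n]+ fields would split the quoted cell in two, which is the kind of case where the csv module pays off.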

That’s it for this post!

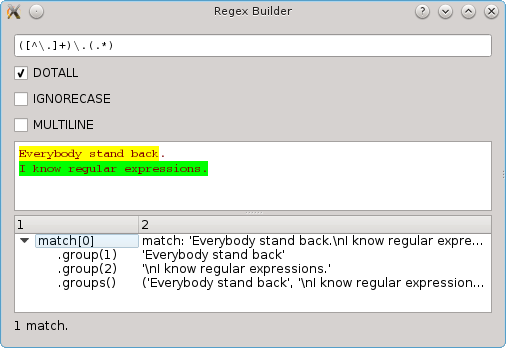

By the way, if you sometimes need visual help when building a regular expression, take a look at my regex builder. I also know about https://pythex.org/, but this does not highlight capture groups.
